Are these random numbers really random?

About this vulnerability

Roughly half a year ago, I discovered that Pubu uses a very simple random number generator to create random IDs for its books. This allows an attacker to easily predict the IDs of the pages of a book and download them without purchasing the book. As a result, all books on Pubu (~300K books) can be stolen, and they are worth around 43 million New Taiwan dollars (~$1.4M).

After I discovered this vulnerability, I reported it to HITCON ZeroDay on 2023/03/25, and HITCON ZeroDay reported it to Pubu twice in April. Unfortunately, Pubu did not respond, and the vulnerability has still not been fixed. The details and the proof-of-concept (PoC) implementation of this vulnerability are now public, and someone in the wild may already be stealing all the books on Pubu. I need to stress that this post is not meant as guidance on stealing things. Using this vulnerability to download books may violate the law.

The main purpose of this blog post is to (once again) draw Pubu's attention to this issue, and I hope they fix it as soon as possible.

In the rest of this post, I will first give an overview of how this vulnerability works, and then provide the details about the issues in Pubu's web design and how I reverse-engineered their random number generator. After that, I will talk about the proof-of-concept implementation and give some potential defense schemes.

How does it work?

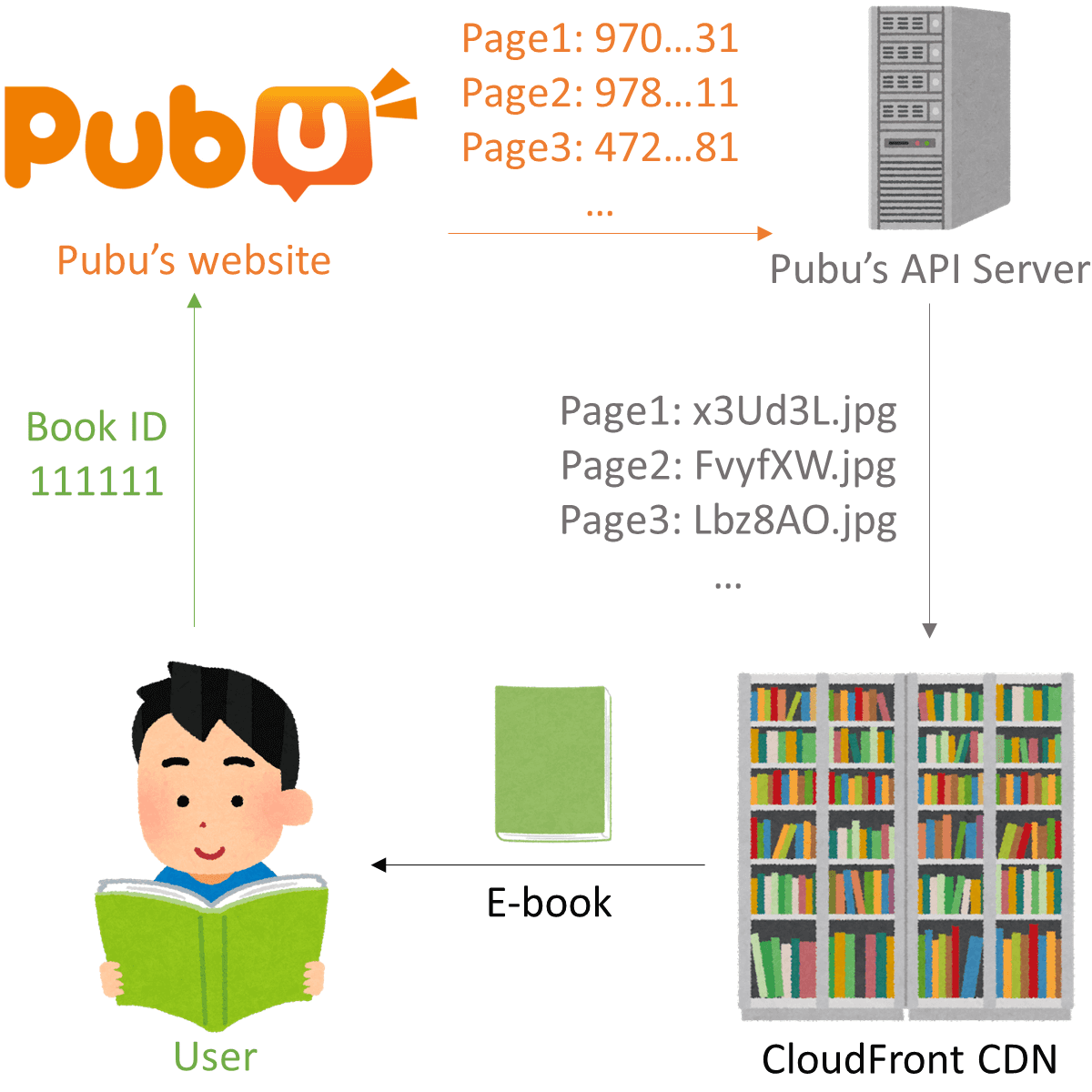

When a user reads a book on Pubu, Pubu first asks its API server for the filenames of the pages of the book using these pages' IDs. After that, these pages are downloaded from CloudFront (a content delivery network) and delivered to the user.

This procedure looks fine, but it has two big problems. First, while the page ID looks very long and random (e.g., 970338046443724931 and 472388846439747981), the part of it that actually matters is surprisingly short and predictable. Second, the API server is open to the public and responds to queries for any book.

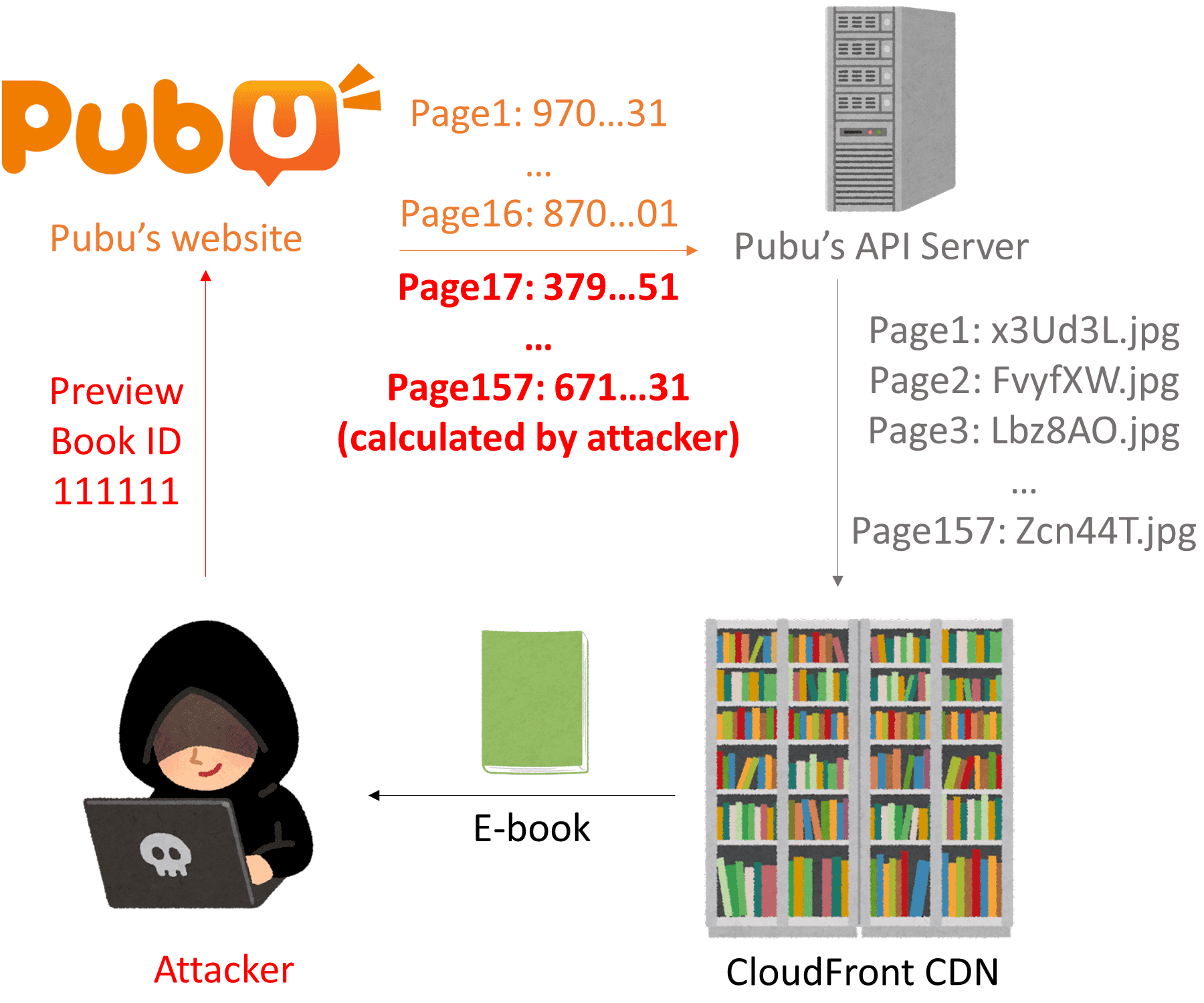

Consequently, when an attacker knows the page IDs of some pages (one is enough), the attacker can predict the rest of the pages and download the book.

But how can an attacker get some page IDs of a book? (Un)fortunately, Pubu provides a preview functionality that allows the user to read some of the pages of a book, and the attacker can utilize this to get some page IDs.

To sum up, the attacker can steal a book by:

- Previewing the book and getting the page IDs of its sample pages.

- Calculating the page IDs of the other pages.

- Querying the API server for the filenames and downloading them.

Details of the attack

Now that we know how an attacker can steal a book, let's take a closer look at each attack step.

Preview information

The attack begins by previewing a book, which only allows the attacker to read some sample pages, but these pages are sufficient to perform an attack. If we capture the network traffic when we preview a book, we will see the browser fetching data from the API server through the URL:

https://www.pubu.com.tw/api/flex/3.0/book/[book-id]?referralType=RETAIL_TRY

The API server responds with a JSON file containing the sample pages and some metadata of the book. Here is the preview information of the book with ID 365589:

{"book": {"pages": [{"pageNumber": 15,"id": "854173581359938562","version": 0},{"pageNumber": 16,"id": "454163985352487572","version": 0},{"pageNumber": 17,"id": "357113981320723572","version": 0}],"totalPage": 32,"hasPermission": false,"documentId": 300923,"title": "工商時報 2023年6月21日","storeId": 568803,"direction": 1},"isLogged": true,"key": "26C071CB680DB1A1C190375723D128B7"}

The most important fields of the preview information are:

- id: the id of a page

- totalPage: the number of pages this book contains

- documentId: the document ID of a book (will come back to this later)
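As a minimal sketch, these fields can be read out of the response with Python's standard `json` module. The `preview_json` string is the response shown above, trimmed to the fields discussed here; the variable names are only illustrative.

```python
import json

# Preview response for book 365589, trimmed to the fields discussed above.
preview_json = """
{"book": {"pages": [
    {"pageNumber": 15, "id": "854173581359938562", "version": 0},
    {"pageNumber": 16, "id": "454163985352487572", "version": 0},
    {"pageNumber": 17, "id": "357113981320723572", "version": 0}],
  "totalPage": 32, "hasPermission": false, "documentId": 300923}}
"""

book = json.loads(preview_json)["book"]

# Map each sample page number to its page ID (kept as a string).
page_ids = {page["pageNumber"]: page["id"] for page in book["pages"]}

print(page_ids)
print(book["totalPage"], book["documentId"])
```

Keeping the IDs as strings matters later, because some of their digits can be leading zeros.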

We can see that we only have the id of a page instead of the actual data, so there must be some other traffic that fetches the page data.

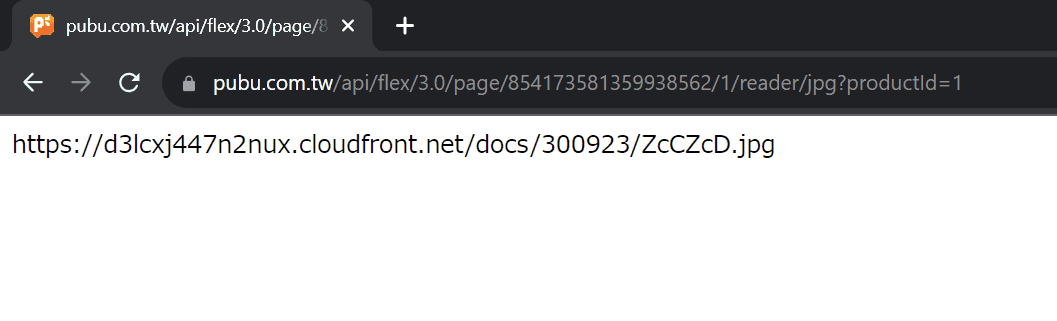

Soon after the preview information is fetched, we will see more requests to the API server, sent to the following URLs:

https://www.pubu.com.tw/api/flex/3.0/page/854173581359938562/1c61eeb88aaf54eaaea283f722fa892f/reader/jpg?productId=365589

https://www.pubu.com.tw/api/flex/3.0/page/454163985352487572/773c20a4b3703dfd937fb0c5777c27c2/reader/jpg?productId=365589

https://www.pubu.com.tw/api/flex/3.0/page/357113981320723572/56236a4fafa7fc968633dde537acdb74/reader/jpg?productId=365589

These requests will have the following responses:

https://d3lcxj447n2nux.cloudfront.net/docs/300923/ZcCZcD.jpg

https://d24p424bn3x9og.cloudfront.net/docs/300923/qJhMcf.jpg

https://d3rhh0yz0hi1gj.cloudfront.net/docs/300923/OFB9XS.jpg

These are the URLs to the actual data of the pages on CloudFront.

If we send these requests without any cookies, such as sending them in private browsing mode, we will still get the same responses. This means that the API server only checks the request URL, and we can get the page data as long as we know the correct requests to these pages.

But how can we generate a valid request? These request URLs look long and complicated, and it seems impossible to forge a valid request.

Before we give up, let's first look at the first big number in the request URLs. If we compare it with the preview information, we can see that these numbers are the page IDs. So we only need to find out the pages' IDs to construct this part of the URL.

Let's move on to the long hexadecimal string that follows the page ID in each URL. To figure out what it is, we can reverse-engineer the JavaScript code that generates it. Long story short, this string is the MD5 hash of the metadata of the book and the page. Therefore, we can generate this string by... wait a second, what will happen if we just put a 1 over there?

Well, it still works. The value of the productId field also does not matter, and it can be replaced by 1. As a result, we only need the page ID to construct the request URL and get the page data. We already know the page IDs of the sample pages from the preview information, but the IDs of the other pages remain unknown. So the next question is: how are these long, random-looking page IDs generated? (But are they really random?)

Generate the page IDs

This is the most difficult step of the attack. Since the page IDs of the sample pages are provided by the preview information, there is no JavaScript code we can analyze to reverse-engineer the calculation of the page ID. But don't worry, let's look at the page IDs that we already know:

    15: 854173581359938562
    16: 454163985352487572
    17: 357113981320723572

These numbers look a bit random, but they are also not very random. They always end in 2, and the second digit is always 5.

There is something more interesting: if we fetch the preview information again, these page IDs change, and we get a different set of page IDs. The following are the different IDs of page 15 that I got after fetching the preview information 15 times. I separated the digits into columns to show that some digits change while others stay constant.

    A B C D E F G H I J K L M N O P Q R
    8 5 4 1 7 3 5 8 1 3 5 9 9 3 8 5 6 2
    7 5 1 1 3 3 8 8 0 3 8 9 0 3 6 5 3 2
    7 5 4 1 5 3 9 8 5 3 5 9 1 3 3 5 0 2
    7 5 1 1 5 3 0 8 7 3 1 9 3 3 5 5 3 2
    0 5 9 1 3 3 9 8 9 3 1 9 4 3 8 5 5 2
    6 5 8 1 2 3 1 8 9 3 0 9 1 3 8 5 2 2
    8 5 9 1 1 3 1 8 1 3 3 9 7 3 4 5 3 2
    3 5 4 1 6 3 6 8 9 3 1 9 5 3 0 5 7 2
    0 5 8 1 9 3 5 8 6 3 9 9 4 3 9 5 3 2
    4 5 0 1 6 3 0 8 6 3 3 9 2 3 3 5 5 2
    6 5 4 1 5 3 5 8 2 3 5 9 6 3 6 5 7 2
    6 5 3 1 4 3 5 8 4 3 8 9 2 3 5 5 8 2
    1 5 7 1 6 3 4 8 2 3 5 9 9 3 4 5 8 2
    9 5 0 1 3 3 1 8 5 3 0 9 5 3 9 5 1 2
    1 5 4 1 4 3 6 8 6 3 9 9 5 3 2 5 7 2

The digits in columns B, D, F, ..., and R are always the same, while the other digits change randomly. Maybe these random digits follow certain rules, but if we replace them with zeros (or any other digits) and send the query to the API server, we still get the same result. This shows that only the digits in the even positions (columns B, D, F, ...) matter.
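The column check can also be done mechanically. The snippet below is a small sketch that takes the first five rows of the table above as strings and reports which columns never change; the variable names are my own.

```python
# Five of the IDs observed for page 15 (rows 1-5 of the table above).
samples = [
    "854173581359938562",
    "751133880389036532",
    "754153985359133502",
    "751153087319335532",
    "059133989319438552",
]

# A column is constant if every sample has the same digit at that position.
constant_columns = [
    chr(ord("A") + i)
    for i in range(len(samples[0]))
    if len({s[i] for s in samples}) == 1
]
print("".join(constant_columns))  # BDFHJLNPR

# Keeping only those positions gives the part of the ID that matters.
print(samples[0][1::2])  # 513839352
```

With only a handful of samples, a random column could look constant by coincidence, so more samples give a more trustworthy answer.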

Let's revisit the page IDs of the preview pages and only focus on the digits that matter. Here are the page IDs of the preview pages with random digits removed:

        B D F H J L N P R
    15: 5 1 3 8 3 9 3 5 2
    16: 5 1 3 8 3 2 8 5 2
    17: 5 1 3 8 3 0 2 5 2

Only the digits in columns L and N are different. We can guess that the page ID increases from page to page, and that this increment affects the values in columns L and N. However, we need more information to figure out the real calculation happening here.

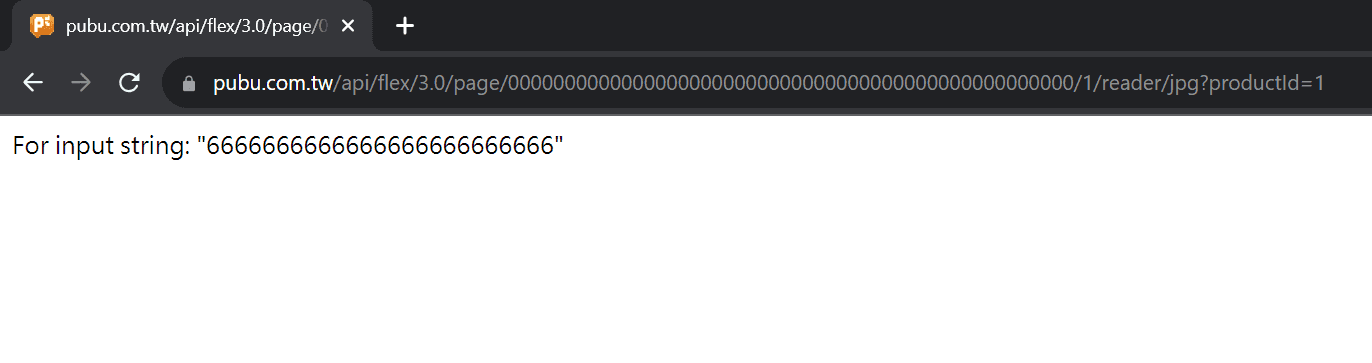

We can preview other books and get more page IDs, but we can also poke the API server and see its reaction. For example, we can query a page ID made of 50 zeros:

The error message is pretty interesting. It contains a string of 25 sixes. This agrees with our guess that half of the digits do not matter and are removed, but why the digit is 6 is not yet clear.

We can also send queries of 50 ones, 50 twos, and so on, and see what happens. Here are the results:

    Query    => Error
    --------------------
    50 * "0" => 25 * "6"
    50 * "1" => 25 * "1"
    50 * "2" => 25 * "4"
    50 * "3" => "the pageId value must greater than zero"
    50 * "4" => 25 * "8"
    50 * "5" => 25 * "9"
    50 * "6" => 25 * "2"
    50 * "7" => 25 * "5"
    50 * "8" => 25 * "7"
    50 * "9" => 25 * "3"

Now we can see that when the API server receives our query, it removes the redundant digits and "translates" the remaining digits into other digits. For example, 0 is translated into 6, and 4 is translated into 8. The error message for 3 is different because 3 is translated into 0, so the whole query becomes zero, and the API server only accepts a page ID greater than zero.

So if we use these results and translate 98765432109876543210 back to 54807296135480729613, add the redundant digits, and query this ID, the error message should be 98765432109876543210, right? Let's try this.

Sadly, our guess is wrong. The error message is 10987654321098765432 instead of 98765432109876543210. But our guess is not terribly wrong. The error message still contains 9876543210 while in a different order. Maybe there is some permutation applied to the page ID? We can verify this by querying with 19 1s and one 2. For each query, we place the number 2 on different columns and see where it ends up being.

A B C D E F G H I J K L M N O P Q R S T A B C D E F G H I J K L M N O P Q R S T2 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 => 1 1 2 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 11 2 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 => 1 1 1 2 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 11 1 2 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 => 1 1 1 1 2 1 1 1 1 1 1 1 1 1 1 1 1 1 1 11 1 1 2 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 => 1 1 1 1 1 2 1 1 1 1 1 1 1 1 1 1 1 1 1 11 1 1 1 2 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 => 1 1 1 1 1 1 2 1 1 1 1 1 1 1 1 1 1 1 1 11 1 1 1 1 2 1 1 1 1 1 1 1 1 1 1 1 1 1 1 => 1 1 1 1 1 1 1 2 1 1 1 1 1 1 1 1 1 1 1 11 1 1 1 1 1 2 1 1 1 1 1 1 1 1 1 1 1 1 1 => 1 1 1 1 1 1 1 1 2 1 1 1 1 1 1 1 1 1 1 11 1 1 1 1 1 1 2 1 1 1 1 1 1 1 1 1 1 1 1 => 1 1 1 1 1 1 1 1 1 2 1 1 1 1 1 1 1 1 1 11 1 1 1 1 1 1 1 2 1 1 1 1 1 1 1 1 1 1 1 => 1 1 1 1 1 1 1 1 1 1 2 1 1 1 1 1 1 1 1 11 1 1 1 1 1 1 1 1 2 1 1 1 1 1 1 1 1 1 1 => 1 1 1 1 1 1 1 1 1 1 1 2 1 1 1 1 1 1 1 11 1 1 1 1 1 1 1 1 1 2 1 1 1 1 1 1 1 1 1 => 1 1 1 1 1 1 1 1 1 1 1 1 2 1 1 1 1 1 1 11 1 1 1 1 1 1 1 1 1 1 2 1 1 1 1 1 1 1 1 => 1 1 1 1 1 1 1 1 1 1 1 1 1 2 1 1 1 1 1 11 1 1 1 1 1 1 1 1 1 1 1 2 1 1 1 1 1 1 1 => 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 1 1 1 1 11 1 1 1 1 1 1 1 1 1 1 1 1 2 1 1 1 1 1 1 => 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 1 1 1 11 1 1 1 1 1 1 1 1 1 1 1 1 1 2 1 1 1 1 1 => 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 1 1 11 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 1 1 1 1 => 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 1 11 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 1 1 1 => 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 11 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 1 1 => 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 21 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 1 => 2 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 11 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 => 1 2 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

So the permutation is actually very simple. It only moves the last two digits to the front, which means it is rotating the number to the right by two digits. We can verify this by adjusting our query 54807296135480729613 to 80729613548072961354 and see whether the error message is 987654...3210.

It works!
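Both experiments can be replayed offline with a tiny model of what the server appears to do. This is only a sketch of the observed behavior, not Pubu's actual code: `server_view` is a name I made up, and it takes the digits that remain after the redundant ones have been removed.

```python
# Digit translation inferred from the error messages (query digit -> server digit).
TRANSLATE = str.maketrans("0123456789", "6140892573")


def server_view(kept_digits: str) -> str:
    """Translate each digit, then rotate the result right by two positions."""
    translated = kept_digits.translate(TRANSLATE)
    return translated[-2:] + translated[:-2]


# First attempt: translation only, which the server then rotates.
print(server_view("54807296135480729613"))  # 10987654321098765432

# Second attempt: the query is rotated left by two beforehand.
print(server_view("80729613548072961354"))  # 98765432109876543210
```

The model reproduces both error messages, which supports the idea that translation and a two-digit rotation are the only operations applied to the kept digits.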

In summary, the API server will transform the query with three operations:

- Remove the redundant digits.

- Translate the digits.

- Rotate the digits to the right.

Now let's apply these operations to the page IDs of the preview pages to simplify them and see what will happen.

    15: 854173581359938562 => 949107030
    16: 454163985352487572 => 949107047
    17: 357113981320723572 => 949107064

Now the page IDs look much more reasonable. The page ID is increased by 17 when the page number is increased by 1. But there is still something missing: why 17? and what will happen if we only increase the page ID by 1?
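For readers who want to check the numbers, here is a short sketch that applies the three operations to the preview page IDs and prints the difference between consecutive pages. The function name `decode_page_id` is hypothetical, and the translation table is the one inferred from the error messages earlier.

```python
# Digit translation inferred from the error messages (query digit -> server digit).
TRANSLATE = str.maketrans("0123456789", "6140892573")


def decode_page_id(page_id: str) -> int:
    """Apply the three observed operations to an ID taken from the preview."""
    kept = page_id[1::2]                          # drop columns A, C, E, ...
    translated = kept.translate(TRANSLATE)        # translate the digits
    rotated = translated[-2:] + translated[:-2]   # rotate right by two
    return int(rotated)


preview = {
    15: "854173581359938562",
    16: "454163985352487572",
    17: "357113981320723572",
}
decoded = {number: decode_page_id(pid) for number, pid in preview.items()}
print(decoded)  # {15: 949107030, 16: 949107047, 17: 949107064}

# Difference between consecutive pages.
values = list(decoded.values())
print([b - a for a, b in zip(values, values[1:])])  # [17, 17]
```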

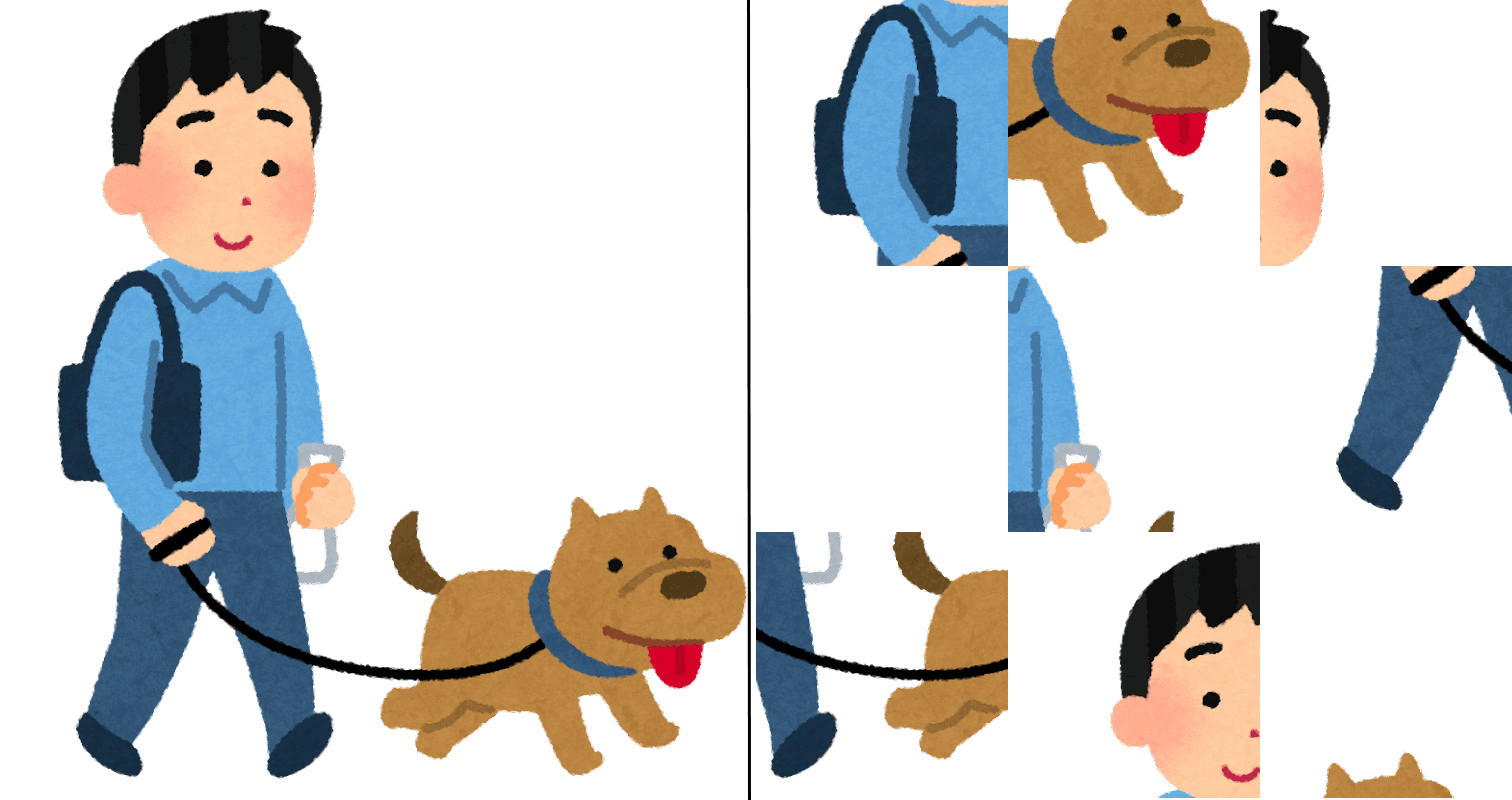

Well, this is not very important, because we already know how to predict the page ID of the other pages. (For people who really want to know what is going on, please refer to the Appendix section.) To get the page ID of page 18, we increase the page ID of page 17 by 17, rotate the digits, translate the numbers, add some extra digits to it, and then we will have 050103080304010502. If we query this page ID, we will get the following URL, which is the shuffled image of page 18.

https://duyldfll42se2.cloudfront.net/docs/300923/VvOusf.jpg

Note the page number B4 in the shuffled image. On page 17, the page number is B3, and this proves that this is indeed the next missing page that is not shown in the preview.

So now we can search for the missing pages by either increasing or decreasing the page ID by 17, depending on whether we are looking for later or earlier pages. Sometimes, the page IDs of a book are not contiguous, meaning that we may encounter a page from another book during our search. To check whether a page is correct, we can check the documentId in the response. For example, the URL of page 18 contains the documentId 300923, which matches the documentId we saw in the preview information and confirms that this page is what we want.

Once we have found all the missing pages, we can go on to fix the image data.

Shuffle the images

When we download an image from CloudFront, it looks like a "shuffled" image, similar to the image below on the right.

To get the real page of a book, we need to perform one last step to shuffle the image back to its original form, but we need to first figure out how the image is shuffled.

If we use the inspect tool in Chrome and check the initiator that sends the requests to CloudFront, we will see the JavaScript file canvasReader.js. Inside this file, there are several functions that handle the rendering and processing of page images. Among them, the decode and newDecode functions are responsible for decoding the shuffled image back to normal. There is nothing complicated here. They just crop the image into small blocks and paste the blocks back in the correct order to restore the image. We can simply follow these steps to generate the original image, and now we can download a book without paying anything.

Implementation

As a proof-of-concept, I implemented this attack using Python, and the implementation is available on GitHub. Since this vulnerability is not fixed yet, my code can still download any book on Pubu at the time I'm writing this post. But before you execute my code to download the books, I have to warn you that doing this may be against the law. I implemented and published this code only to prove that this attack really works and to provide more details about this attack.

Disclosure and potential defenses

After I discovered this vulnerability, I reported it to HITCON ZeroDay, and they notified Pubu before they published it. I also reached out to Pubu and asked them to fix it before I published my PoC implementation and this blog post. But right now, Pubu is still vulnerable, and anyone can easily steal all the books on their site.

If I were an engineer working for Pubu, I would probably do the following:

- Limit access to the API server to book buyers only.

- Enforce authentication on CloudFront to prevent unauthorized access.

- Rename all the files on CloudFront and use a secure pseudo-random number generator to generate long and secure random filenames.
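As a rough sketch of the first and third points, Python's standard `secrets` module draws from the operating system's cryptographically secure generator, so the resulting names cannot be predicted from earlier ones. Both function names below are hypothetical, and the permission check is deliberately simplified (a real one would also need to allow the preview pages).

```python
import secrets


def new_object_name(extension: str = "jpg") -> str:
    """Return an unguessable filename for a page image."""
    # 32 random bytes (256 bits) from the OS CSPRNG, URL-safe base64 encoded.
    return f"{secrets.token_urlsafe(32)}.{extension}"


def may_read_page(purchased_book_ids: set[int], book_id: int) -> bool:
    """Server-side check: only serve pages of books the account has bought."""
    return book_id in purchased_book_ids


print(new_object_name())
print(may_read_page({365589}, 365589))  # True
print(may_read_page(set(), 365589))     # False
```

Random names alone are not enough if the API still hands them out to anyone, which is why the access check has to happen on the server for every page request.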

I hope Pubu will fix this in the near future.

Appendix: the real page ID

We already know that the page ID increases by 17 when the page number increases by 1, but we still don't know why it is 17 instead of some other number. One possible explanation is that the page ID we see is the real page ID multiplied by 17. (For clarity, I will refer to the real page ID as the page ID, and the page ID we saw earlier as the fake ID from now on.) Therefore, when the page ID increases by 1, the fake ID increases by 17. I made this guess because the affine cipher works this way.

In the affine cipher, an offset is added to the result after the multiplication, and the same may happen here: after the multiplication, some offset may be added or subtracted to generate the fake ID. To figure out this offset, we can rely on the error message "the pageId value must greater than zero", which we already saw when we were figuring out the translation rules.

When we query the fake ID from 000 to 021, the API server will respond with the error message: "the pageId value must greater than zero". However, when we request the fake ID 022, the response is an HTTP error code 403.

From this result, we can see that page ID 1 is converted to 022, so the offset is 5. This means that the fake ID is the real page ID multiplied by 17 and then added by 5.
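The arithmetic is small enough to check in a few lines. The sketch below uses the multiplier and offset inferred above; the use of floor division when going back to the real ID is my assumption about how the server rounds, chosen because it agrees with the error messages for 000 to 021.

```python
MULTIPLIER, OFFSET = 17, 5  # values inferred in this appendix


def to_fake(real_id: int) -> int:
    """Real page ID -> fake ID (before rotation and digit translation)."""
    return real_id * MULTIPLIER + OFFSET


def to_real(fake_id: int) -> int:
    """Fake ID -> real page ID; floor division is an assumption."""
    return (fake_id - OFFSET) // MULTIPLIER


print(to_fake(1))                            # 22
print([to_real(f) for f in range(20, 23)])   # [0, 0, 1]

# The simplified IDs of preview pages 15, 16, and 17 map to consecutive real IDs.
print([to_real(f) for f in (949107030, 949107047, 949107064)])
# [55829825, 55829826, 55829827]
```

Under this model every fake ID below 22 maps to a real ID of zero or less, which matches the "must greater than zero" error, and consecutive pages get consecutive real IDs.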

If you are not convinced by this simple experiment, keep reading.

Normally, the API server will treat the query as the fake ID of a page. However, I discovered that the API server will treat the query as the real page ID when the input of the query is less than 10000. Using this behavior, we can verify our calculation by:

- Query a page using the real page ID, say 3414.

- Convert the real page ID to a fake ID and query again.

If both queries return the same page, then our calculation must be correct.

When we query using the real page ID 3414, the API server will respond with the following page:

Let's now convert 3414 to a fake ID. First, we multiply it by 17 and add 5, which gives 58043. After that, we move the first two digits (58) to the back, and now we have 04358. Finally, we translate the digits and insert redundant zeros, which generates 0302090704. Let's see the response from the API server:

The response matches the one for the query using the real page ID 3414, which shows that our calculation is correct.